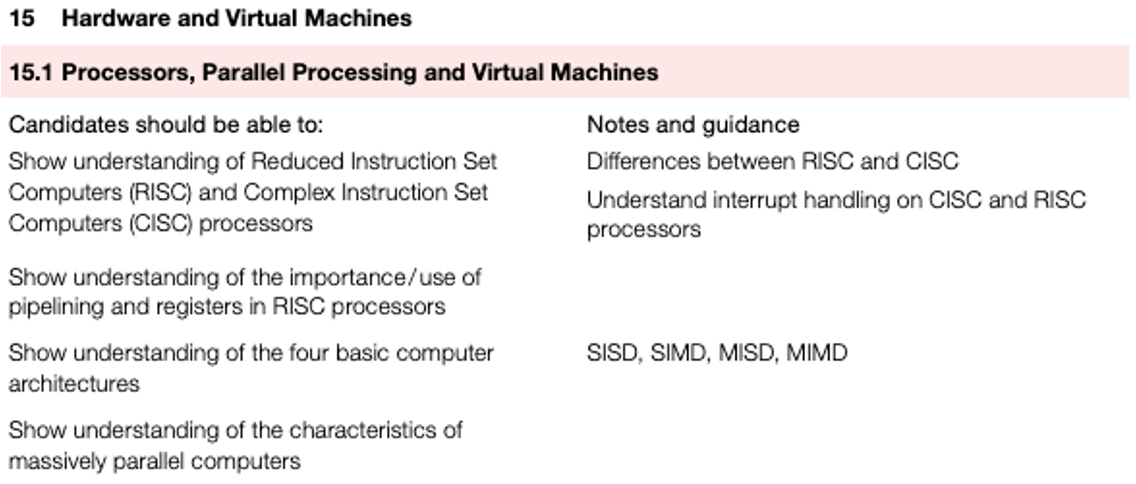

Processors

RISC and CISC processors

Early computers made use of the Von Neumann architecture (see Chapter 4).

Modern advances in computer technology have led to much more complex processor design.

Two basic philosophies have emerged over the last few years:

- developers who want the emphasis to be on the hardware used: the hardware should be chosen to suit the high-level language development

- developers who want the emphasis to be on the software/instruction sets to be used: this philosophy is driven by the demand for ever faster execution times.

The first philosophy is part of a group of processor architectures known as CISC (complex instruction set computer).

The second philosophy is part of a group of processor architectures known as RISC (reduced instruction set computer).

CISC processors

- CISC processor architecture makes use of more internal instruction formats than RISC.

- The design philosophy is to carry out a given task with as few lines of assembly code as possible.

- Processor hardware must therefore be capable of handling more complex assembly code instructions.

- Essentially, CISC architecture is based on single complex instructions which need to be converted by the processor into a number of sub-instructions to carry out the required operation.

- For example, to add the two numbers A and B together, we could write the following assembly instruction:

ADD A, B – this is a single instruction that requires several sub-instructions (multi-cycle) to carry out the addition operation.

This methodology leads to shorter code (than RISC) but may actually lead to more work being carried out by the processor.

RISC processors

- RISC processors have fewer built-in instruction formats than CISC.

- This can lead to higher processor performance.

- The RISC design philosophy is built on the use of less complex instructions, which is done by breaking up the assembly code instructions into a number of simpler single-cycle instructions.

- Ultimately, this means there is a smaller, but more optimised set of instructions than CISC.

- Using the same example as above to carry out the addition of two numbers A and B (this is the equivalent operation to ADD A, B):

LOAD X, A – this loads the value of A into a register X

LOAD Y, B – this loads the value of B into a register Y

ADD X, Y – this takes the values of A and B from registers X and Y and adds them

STORE Z – the result of the addition is stored in register Z

The differences between CISC and RISC processors

| CISC features | RISC features |
|---|---|
| Many instruction formats are possible | Uses fewer instruction formats/sets |
| There are more addressing modes | Uses fewer addressing modes |
| Makes use of multi-cycle instructions | Makes use of single-cycle instructions |
| Instructions can be of a variable length | Instructions are of a fixed length |
| Longer execution time for instructions | Faster execution time for instructions |
| Decoding of instructions is more complex | Makes use of general multi-purpose registers |
| It is more difficult to make pipelining work | Easier to make pipelining function correctly |
| The design emphasis is on the hardware | The design emphasis is on the software |
| Uses the memory unit to allow complex instructions to be carried out | Processor chips require fewer transistors |
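To make the ADD A, B example concrete, here is a minimal sketch of a toy machine that runs both versions. It is a hypothetical model, not a real instruction set: the CISC instruction is called `ADD_MEM` here only to tell it apart from the register-only RISC `ADD`, the result is assumed to end up in an accumulator, and the cycle costs in `CYCLE_COST` are assumed values chosen for illustration.

```python
# Toy comparison of the CISC and RISC versions of "add A and B".
# Hypothetical model: mnemonics follow the text, cycle costs are assumptions.

CYCLE_COST = {
    "ADD_MEM": 4,  # CISC ADD A, B: one instruction, several internal sub-steps
    "LOAD": 1,     # RISC instructions are assumed to be single-cycle
    "ADD": 1,
    "STORE": 1,
}


def run(program, memory):
    """Execute a program; return (result, instructions executed, cycles used)."""
    regs = {}   # general-purpose registers such as X, Y, Z
    acc = 0     # holds the output of the most recent addition
    cycles = 0
    for op, *operands in program:
        cycles += CYCLE_COST[op]
        if op == "ADD_MEM":
            # CISC: the hardware fetches both operands from memory itself
            a, b = operands
            acc = memory[a] + memory[b]
        elif op == "LOAD":
            reg, addr = operands
            regs[reg] = memory[addr]
        elif op == "ADD":
            # RISC: the operands must already be in registers
            r1, r2 = operands
            acc = regs[r1] + regs[r2]
        elif op == "STORE":
            (reg,) = operands
            regs[reg] = acc
        else:
            raise ValueError(f"unknown instruction {op}")
    return acc, len(program), cycles


memory = {"A": 7, "B": 5}

cisc_program = [("ADD_MEM", "A", "B")]
risc_program = [
    ("LOAD", "X", "A"),
    ("LOAD", "Y", "B"),
    ("ADD", "X", "Y"),
    ("STORE", "Z"),
]

print(run(cisc_program, memory))  # (12, 1, 4)
print(run(risc_program, memory))  # (12, 4, 4)
```

With these assumed costs both programs need four cycles in total. The CISC program is shorter, while the RISC program is built from uniform single-cycle instructions, which is what makes RISC instructions easier to pipeline.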

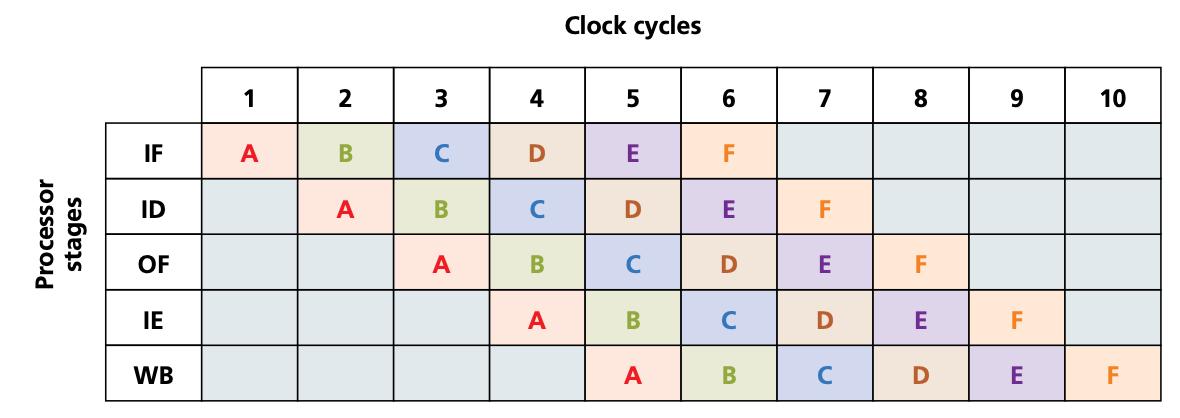

Pipelining

- One of the major developments resulting from RISC architecture is pipelining.

- This is one of the less complex ways of improving computer performance.

- Pipelining allows several instructions to be processed simultaneously, each at a different stage, without having to wait for previous instructions to be completed.

- To understand how this works, we need to split up the execution of a given instruction into its five stages:

- instruction fetch cycle (IF)

- instruction decode cycle (ID)

- operand fetch cycle (OF)

- instruction execution cycle (IE)

- writeback result process (WB).
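The sketch below prints which instruction occupies each of the five stages on every clock cycle. It assumes that each stage takes exactly one clock cycle and that no hazards stall the pipeline; the names `pipeline_schedule`, `cycles_without_pipeline` and `cycles_with_pipeline` are hypothetical.

```python
STAGES = ["IF", "ID", "OF", "IE", "WB"]


def pipeline_schedule(n_instructions, stages=STAGES):
    """Return one dict per clock cycle mapping stage name -> instruction label."""
    k = len(stages)
    total_cycles = k + n_instructions - 1
    table = []
    for cycle in range(total_cycles):
        row = {}
        for s, stage in enumerate(stages):
            # instruction i (counting from 0) is in stage s during cycle i + s
            instr = cycle - s
            if 0 <= instr < n_instructions:
                row[stage] = f"i{instr + 1}"
        table.append(row)
    return table


def cycles_without_pipeline(n, k=len(STAGES)):
    # each instruction must finish all k stages before the next one starts
    return n * k


def cycles_with_pipeline(n, k=len(STAGES)):
    # k cycles to fill the pipeline, then one instruction completes per cycle
    return k + n - 1


for cycle, row in enumerate(pipeline_schedule(6), start=1):
    cells = "  ".join(row.get(stage, "--").ljust(3) for stage in STAGES)
    print(f"cycle {cycle:2}: {cells}")

print(cycles_without_pipeline(6), cycles_with_pipeline(6))  # 30 10
```

From cycle 5 onwards all five stages are busy at once, so under these assumptions six instructions finish in 10 cycles instead of 30.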

Interrupts

- Once the processor detects the existence of an interrupt (at the end of the fetch-execute cycle), the current program is temporarily stopped (depending on interrupt priorities), and the status of each register is stored.

- Once the interrupt has been serviced, the processor can then be restored to the status it had before the interrupt was received.

- However, with pipelining, there is an added complexity; as the interrupt is received, there could be a number of instructions still in the pipeline.

- The usual way to deal with this is to discard all instructions in the pipeline except for the last instruction in the write-back (WB) stage.

- The interrupt handler routine can then be run once this remaining instruction has completed and, once the interrupt has been serviced, the processor can restart with the next instruction in the sequence.

- Alternatively, although much less common, the contents of the five stages can be stored in registers.

- This allows all current data to be stored, so the processor can be restored to its previous status once the interrupt has been serviced.
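The usual approach described above can be sketched as follows. This is a simplified model under stated assumptions: the pipeline is represented as a dict from stage name to an instruction address (hypothetical addresses), instructions sit at consecutive addresses, and there are no branches in flight, so "the next instruction in the sequence" is simply the WB address plus one. The function names are hypothetical.

```python
STAGES = ["IF", "ID", "OF", "IE", "WB"]


def flush_on_interrupt(pipeline):
    """Usual approach: let the WB instruction finish and discard the rest.

    `pipeline` maps each stage name to an instruction address, or None if
    that stage is empty. Returns (completed, discarded, restart_at).
    """
    completed = pipeline.get("WB")
    # every instruction behind WB is thrown away and must be fetched again
    discarded = sorted(
        pipeline[stage]
        for stage in STAGES[:-1]
        if pipeline.get(stage) is not None
    )
    if completed is not None:
        restart_at = completed + 1   # next instruction in the sequence
    elif discarded:
        restart_at = discarded[0]    # oldest discarded instruction
    else:
        restart_at = None            # pipeline was empty
    return completed, discarded, restart_at


def save_pipeline(pipeline):
    """Less common alternative: keep the contents of all five stages."""
    return dict(pipeline)


state = {"IF": 104, "ID": 103, "OF": 102, "IE": 101, "WB": 100}
print(flush_on_interrupt(state))  # (100, [101, 102, 103, 104], 101)
```

Flushing throws away the work already done on instructions 101 to 104, which is the cost of this simpler approach; saving every stage avoids that but needs extra registers.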

Parallel processor systems

There are many ways that parallel processing can be carried out.

The four categories of basic computer architecture presently used are described below.

- SISD (single instruction single data)

- SIMD (single instruction multiple data)

- MISD (multiple instruction single data)

- MIMD (multiple instruction multiple data)

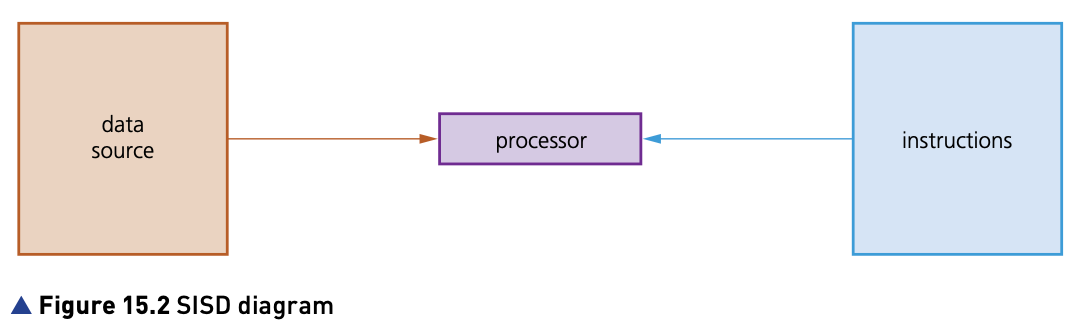

SISD (single instruction single data)

- SISD (single instruction single data) uses a single processor that can handle a single instruction and which also uses one data source at a time.

- Each task is processed in sequential order.

- Since there is a single processor, this architecture does not allow for parallel processing.

- It is most commonly found in applications such as early personal computers.

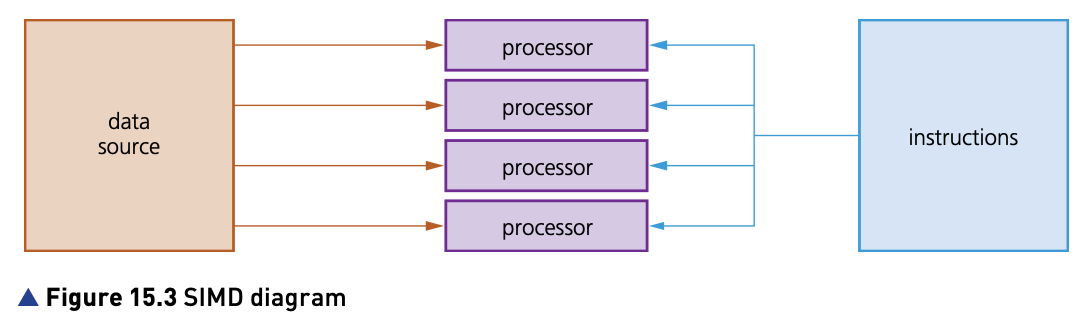

SIMD (single instruction multiple data)

- SIMD (single instruction multiple data) uses many processors.

- Each processor executes the same instruction but uses different data inputs – they are all doing the same calculations but on different data at the same time.

- SIMD processors are often referred to as array processors; they have a particular application in graphics cards.
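The difference between SISD and SIMD can be modelled by counting steps. In this sketch, `sisd_run` applies one instruction to one pair of data items per step, while `simd_run` assumes a hypothetical array of `lanes` processors that all apply the same instruction in lockstep, each to its own data element. Python itself executes the lanes one after another, so the code models the step count rather than giving real hardware parallelism.

```python
from operator import add


def sisd_run(op, xs, ys):
    """SISD: one processor, one instruction on one data item per step."""
    results = []
    steps = 0
    for x, y in zip(xs, ys):
        results.append(op(x, y))
        steps += 1
    return results, steps


def simd_run(op, xs, ys, lanes):
    """SIMD model: `lanes` processors run the same op on different data at once."""
    results = []
    steps = 0
    for start in range(0, len(xs), lanes):
        # one lockstep step: every lane applies the same instruction
        block = zip(xs[start:start + lanes], ys[start:start + lanes])
        results.extend(op(x, y) for x, y in block)
        steps += 1
    return results, steps


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [10, 20, 30, 40, 50, 60, 70, 80]
print(sisd_run(add, xs, ys))            # ([11, 22, 33, 44, 55, 66, 77, 88], 8)
print(simd_run(add, xs, ys, lanes=4))   # ([11, 22, 33, 44, 55, 66, 77, 88], 2)
```

Both produce the same results, but with four lanes the SIMD model needs only two steps instead of eight, which is why this pattern suits graphics work where the same calculation is applied to many pixels.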

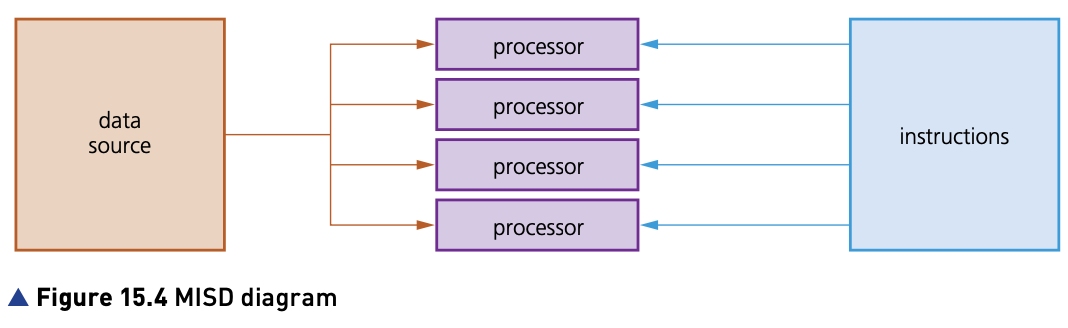

MISD (multiple instruction single data)

- MISD (multiple instruction single data) uses several processors.

- Each processor uses different instructions but uses the same shared data source.

- MISD is not a commonly used architecture (MIMD tends to be used instead).

- However, the American Space Shuttle flight control system did make use of MISD processors.
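A minimal sketch of the MISD idea: one data item is broadcast to several processors, and each runs a different instruction stream on it. The example assumes a redundancy use case, in which different routines compute the same quantity and a majority vote masks a faulty result. This is an illustrative assumption, not a description of the Space Shuttle system, and every function name is hypothetical.

```python
import operator
from collections import Counter
from functools import reduce


# Three different instruction streams that should agree on the same answer.
def sum_builtin(data):
    return sum(data)


def sum_loop(data):
    total = 0
    for value in data:
        total += value
    return total


def sum_reduce(data):
    return reduce(operator.add, data, 0)


def faulty_sum(data):
    # deliberately wrong, to show how voting masks a faulty processor
    return sum(data) + 1


def misd_step(processors, data):
    """Broadcast one data item to every processor; each runs its own instructions."""
    return [proc(data) for proc in processors]


def majority_vote(outputs):
    """Return the value produced by a strict majority, or None if there is none."""
    value, count = Counter(outputs).most_common(1)[0]
    return value if count > len(outputs) // 2 else None


reading = [3, 1, 4, 1, 5]
outputs = misd_step([sum_builtin, sum_loop, sum_reduce, faulty_sum], reading)
print(outputs)                 # [14, 14, 14, 15]
print(majority_vote(outputs))  # 14
```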

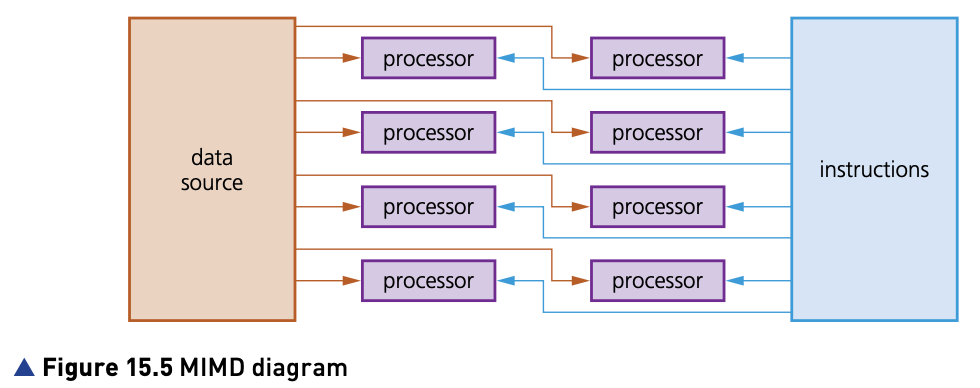

MIMD (multiple instruction multiple data)

- MIMD (multiple instruction multiple data) uses multiple processors.

- Each one can take its instructions independently, and each processor can use data from a separate data source (the data source may be a single memory unit which has been suitably partitioned).

- The MIMD architecture is used in multicore systems (for example, by supercomputers or in the architecture of multicore chips).
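The sketch below shows the shape of MIMD work: each worker runs its own instruction stream (a different function) on its own data. It uses Python's standard `concurrent.futures.ThreadPoolExecutor`; the task functions are hypothetical. In CPython, threads share one interpreter lock, so this illustrates the structure of MIMD rather than true simultaneous execution on separate cores.

```python
from concurrent.futures import ThreadPoolExecutor


def word_count(text):
    return len(text.split())


def largest(numbers):
    return max(numbers)


def reverse(text):
    return text[::-1]


def run_mimd(tasks):
    """Give each (function, data) pair its own worker and collect the results."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        futures = [pool.submit(func, data) for func, data in tasks]
        return [f.result() for f in futures]


# different instructions, each working on different data
tasks = [
    (word_count, "multiple instruction multiple data"),
    (largest, [4, 9, 2]),
    (reverse, "MIMD"),
]
print(run_mimd(tasks))  # [4, 9, 'DMIM']
```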

Parallel computer systems

- SIMD and MIMD are the most commonly used architectures in parallel processing.

- A number of computers (containing SIMD processors) can be networked together to form a cluster.

- The processor from each computer forms part of a larger pseudo-parallel system which can act like a supercomputer.

- Some textbooks and websites also refer to this as grid computing.

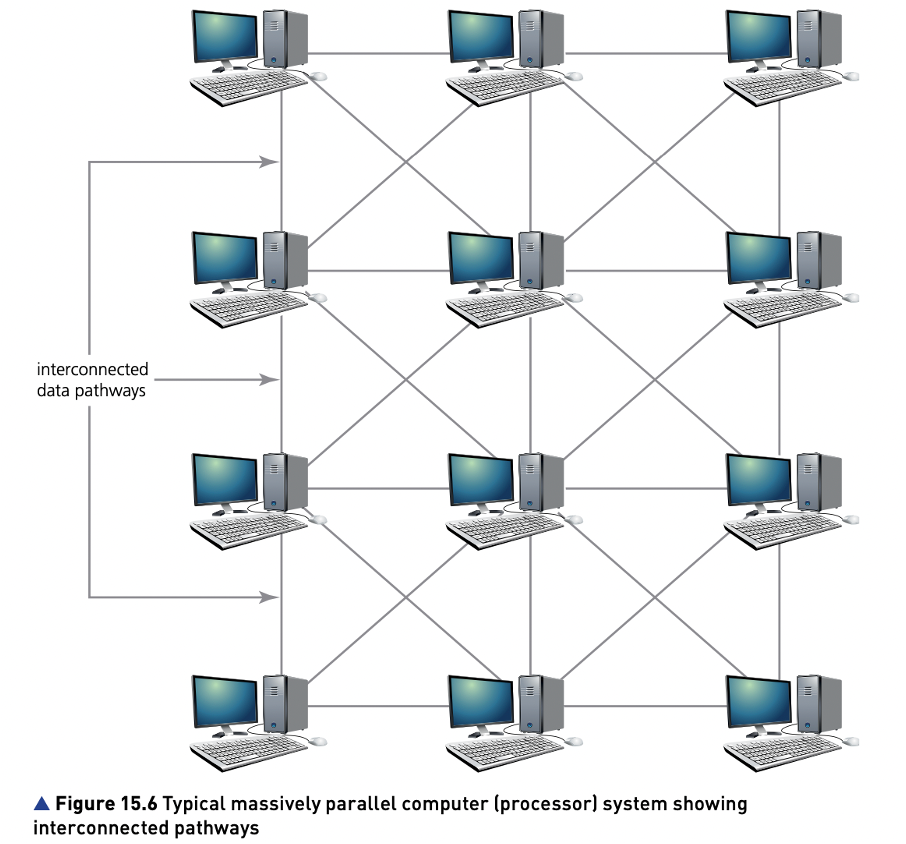

- Massively parallel computers have evolved from the linking together of a number of computers, effectively forming one machine with several thousand processors.

- This was driven by the need to solve increasingly complex problems in the world of science and mathematics.

- Linking computers (processors) together in this way massively increases the processing power of the ‘single machine’.

- This is subtly different from cluster computers, where each computer (processor) remains largely independent.

- In massively parallel computers, each processor will carry out part of the processing and communication between computers is achieved via interconnected data pathways.
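The following sketch shows how a problem can be divided so that each processor carries out part of the processing and the partial results are then combined. The example problem (the sum of the squares of 1 to n) and the function names are hypothetical. Worker threads stand in for processors, and the final `sum` stands in for the communication step over the interconnected data pathways.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(n, parts):
    """Split 1..n into `parts` contiguous (start, end) chunks, end exclusive."""
    size, extra = divmod(n, parts)
    chunks = []
    start = 1
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append((start, end))
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """The share of the work done by one processor."""
    start, end = chunk
    return sum(k * k for k in range(start, end))


def parallel_sum_of_squares(n, processors):
    chunks = partition(n, processors)
    with ThreadPoolExecutor(max_workers=processors) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    # combining step: stands in for communication between processors
    return sum(partials)


print(parallel_sum_of_squares(1000, 8))  # 333833500
```

The answer matches the closed form n(n + 1)(2n + 1)/6, and the same split, compute and combine pattern applies whether the workers are threads, cores or separate networked computers.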